In this collection of perspectives, Stanford HAI senior fellows offer a multidisciplinary discussion of what DeepSeek means for the field of artificial intelligence and society at large. At the Stanford Institute for Human-Centered AI (HAI), faculty are examining not only the model’s technical advances but also the broader implications for academia, industry, and society globally. Additionally, to improve throughput and hide the overhead of all-to-all communication, we're also exploring processing two micro-batches with similar computational workloads simultaneously in the decoding stage. DeepSeek was founded less than two years ago by the Chinese hedge fund High-Flyer as a research lab dedicated to pursuing Artificial General Intelligence, or AGI. "In 1922, Qian Xuantong, a leading reformer in early Republican China, despondently noted that he was not even forty years old, but his nerves were exhausted due to the use of Chinese characters." DeepSeek’s decision to share the detailed recipe of R1 training and open-weight models of varying size has profound implications, as this will likely escalate the pace of progress even further: we are about to witness a proliferation of new open-source efforts replicating and enhancing R1. Second, the demonstration that clever engineering and algorithmic innovation can bring down the capital requirements for serious AI systems means that less well-capitalized efforts in academia (and elsewhere) may be able to compete and contribute in some types of system building.

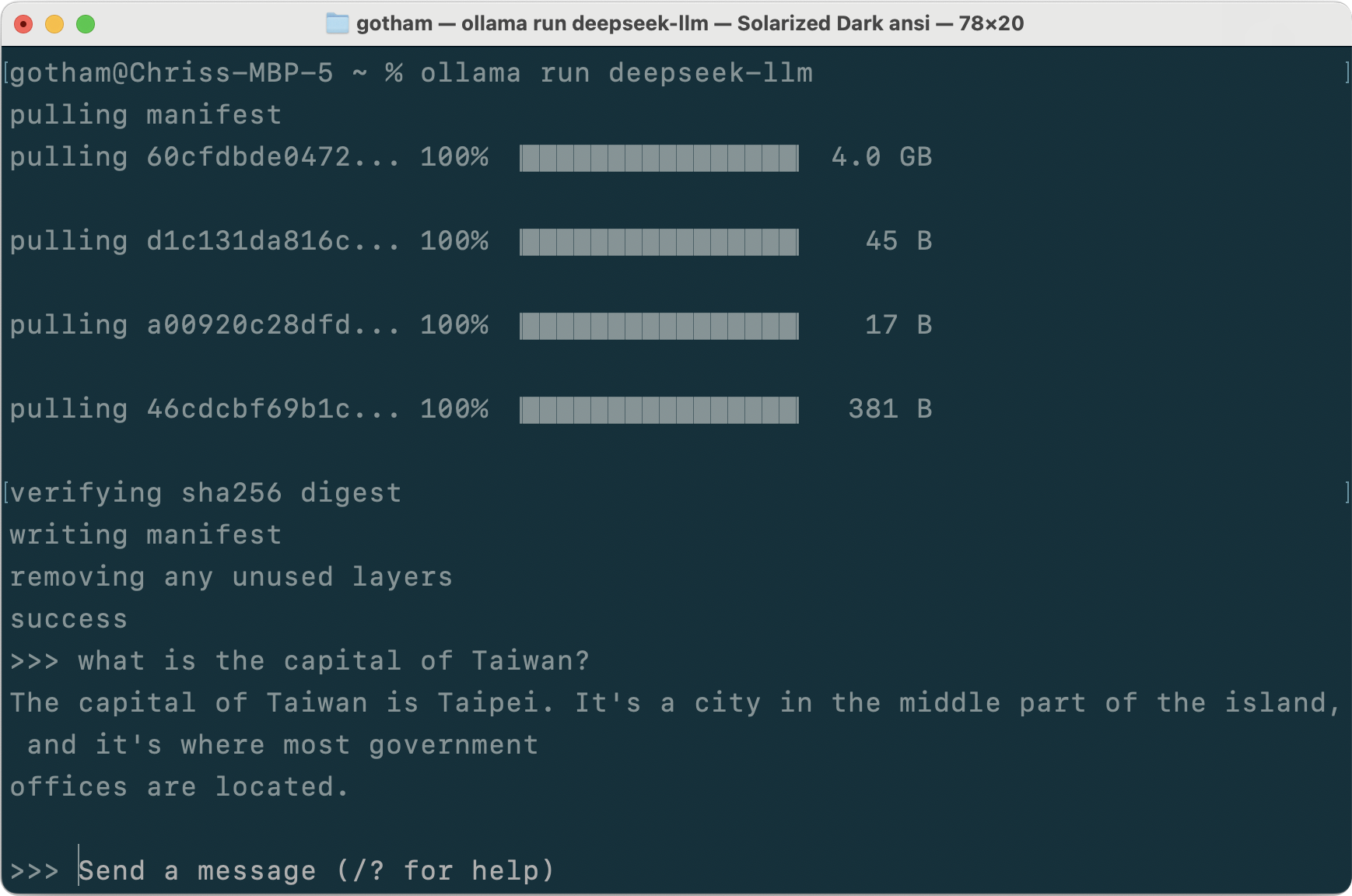

Taken together, we can now imagine non-trivial and relevant real-world AI systems built by organizations with more modest resources. I’m now working on a version of the app using Flutter to see if I can point a mobile version at a local Ollama API URL to have similar chats while selecting from the same loaded models. Hence, I ended up sticking with Ollama to get something running (for now). The "closed source" movement now has some challenges in justifying the approach. Of course there continue to be legitimate concerns (e.g., bad actors using open-source models to do bad things), but even these are arguably best combated with open access to the tools these actors are using, so that folks in academia, industry, and government can collaborate and innovate in ways to mitigate their risks. With the integration of Inflection-1 into Pi, users can now experience the power of a personal AI, benefiting from its empathetic personality, usefulness, and safety standards. The Chinese model is also cheaper for users. A new Chinese AI model, created by the Hangzhou-based startup DeepSeek, has stunned the American AI industry by outperforming some of OpenAI’s leading models, displacing ChatGPT at the top of the iOS App Store, and usurping Meta as the leading purveyor of so-called open source AI tools.
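As a rough illustration of what "pointing a client at a local Ollama API URL" involves, here is a minimal Python sketch. It assumes Ollama is running at its default local address and uses its `/api/tags` (list local models) and `/api/chat` endpoints; the helper names (`build_chat_request`, `model_names`, `call_ollama`, `chat`) are hypothetical and are not taken from the app described above.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if your server differs.
OLLAMA_URL = "http://localhost:11434"


def build_chat_request(model, history, user_text):
    """Build the JSON body for /api/chat from prior messages plus a new user turn."""
    messages = list(history) + [{"role": "user", "content": user_text}]
    # stream=False asks for a single JSON reply instead of a stream of chunks.
    return {"model": model, "messages": messages, "stream": False}


def model_names(tags_response):
    """Extract model names from a parsed /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]


def call_ollama(path, payload=None):
    """GET when payload is None, otherwise POST the payload as JSON."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    req = urllib.request.Request(
        OLLAMA_URL + path,
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def chat(model, history, user_text):
    """Send one user turn to the chosen local model and return the reply text."""
    reply = call_ollama("/api/chat", build_chat_request(model, history, user_text))
    return reply["message"]["content"]


# Example usage (requires a running Ollama server with at least one model pulled):
#   names = model_names(call_ollama("/api/tags"))
#   print(chat(names[0], [], "Hello!"))
```

Keeping the request-building and response-parsing steps separate from the network call means the same logic could be mirrored in a Flutter client, with only the HTTP layer changing.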

Some American AI researchers have cast doubt on DeepSeek’s claims about how much it spent, and how many advanced chips it deployed to create its model. Also note that if you do not have enough VRAM for the size of model you are using, you may find the model actually ends up running on CPU and swap. Furthermore, we meticulously optimize the memory footprint, making it possible to train DeepSeek-V3 without using costly tensor parallelism. Update the policy using the GRPO objective. Coding and Mathematics Prowess: Inflection-2.5 shines in coding and mathematics, demonstrating over a 10% improvement on Inflection-1 on BIG-Bench-Hard, a subset of challenging problems for large language models. 2k or 4k. That’s not a lot of space, although it is likely to keep growing over time. Here, we investigated the effect that the model used to calculate the Binoculars score has on classification accuracy and the time taken to calculate the scores. Tauri, but I haven’t taken the time to wrap my head around that yet. I also think that the WhatsApp API is paid for use, even in developer mode.
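The VRAM caveat above can be checked with back-of-the-envelope arithmetic: weight memory is roughly the parameter count times the bytes per weight. The sketch below is only an estimate under stated assumptions. The function names are hypothetical, and the 1.2 overhead factor (standing in for context cache and runtime buffers) is an illustrative guess, not a measured value.

```python
def weight_bytes(num_params, bits_per_weight):
    """Approximate memory needed for the model weights alone, in bytes."""
    return num_params * bits_per_weight / 8


def fits_in_vram(num_params, bits_per_weight, vram_bytes, overhead=1.2):
    """Rough check: do the weights, padded by an assumed overhead, fit in VRAM?"""
    return weight_bytes(num_params, bits_per_weight) * overhead <= vram_bytes


GB = 1_000_000_000

# A 7-billion-parameter model on an 8 GB GPU:
print(weight_bytes(7 * GB, 16) / GB)     # 16-bit weights, in GB
print(fits_in_vram(7 * GB, 16, 8 * GB))  # 16-bit weights
print(fits_in_vram(7 * GB, 4, 8 * GB))   # 4-bit quantized weights
```

When the check fails, a local runner may offload layers to system memory, which is consistent with the slowdown described above.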

Also, Sam Altman, can you please drop Voice Mode and GPT-5 soon? It will become much more interesting when the AI can start to ask us the questions we usually ask clients or product owners, having the AI ask the developer those clarifying questions. But I suspect it will need a much larger context capacity than is currently available before those kinds of things become possible. The fact that DeepSeek was released by a Chinese organization emphasizes the need to think strategically about regulatory measures and geopolitical implications within a global AI ecosystem where not all players have the same norms and where mechanisms like export controls do not have the same impact. It lets me select and use whichever LLM I have loaded locally and revisit those chat sessions later. Chinese drop of the apparently (wildly) cheaper, less compute-hungry, less environmentally insulting DeepSeek AI chatbot, so far few have considered what this means for AI’s impact on the arts. But, really, DeepSeek’s total opacity when it comes to privacy protection, data sourcing and scraping, and NIL and copyright debates has an outsized impact on the arts. While the open-weight model and detailed technical paper are a step forward for the open-source community, DeepSeek is noticeably opaque when it comes to privacy protection, data sourcing, and copyright, adding to concerns about AI's impact on the arts, regulation, and national security.
