Second, Jones urges companies to consider intellectual property rights when it comes to using ChatGPT. "By defining ownership, organisations can prevent disputes and unauthorised use of intellectual property. Organisations should make sure that the generated content is discoverable and retained appropriately."

Jake Moore, global cyber security advisor at ESET, concludes: "It must be remembered that we are still in the very early stages of chatbots." Despite the security and legal implications of using ChatGPT at work, AI technologies are still in their infancy and are here to stay.

Hinchliffe says CISOs who are particularly concerned about the data privacy implications of ChatGPT should consider implementing software such as a cloud access security broker (CASB). The implications for innovation, and for competition, are staggering.

"These examples were chosen because they are relatively unambiguous, so ChatGPT would not have to infer much context beyond the code that it was given," the researchers said. They added that, for the best results, ChatGPT needs more user input to elicit a contextualised response explaining the code's purpose. The initial tests involved checking whether ChatGPT could uncover buffer overflows in code, which occur when the code fails to allocate enough space to hold input data.

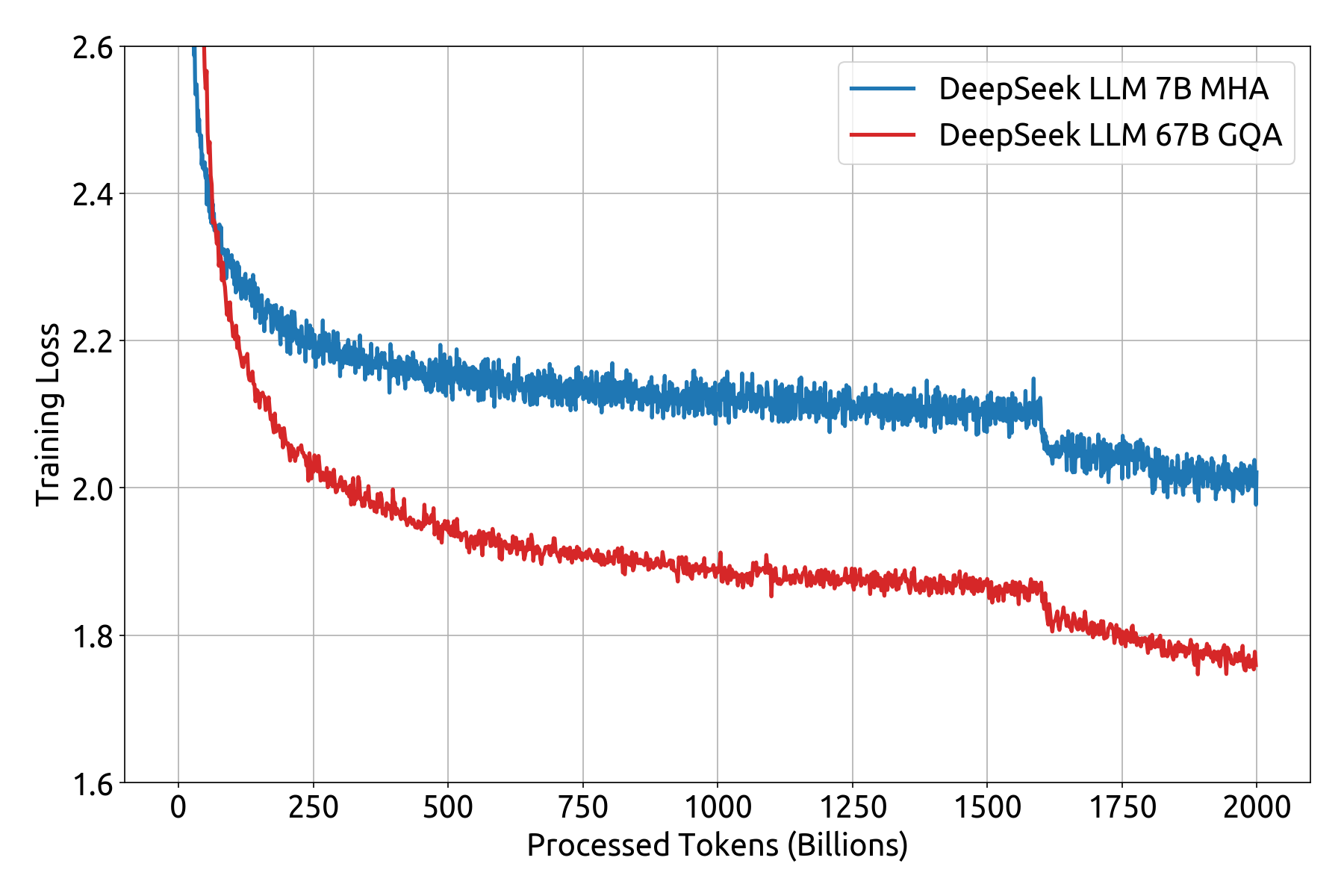

DeepSeek is a new AI model that quickly became a ChatGPT rival after its U.S. launch. This should be a red flag for U.S. … The model itself was also reportedly much cheaper to build and is believed to have cost around $5.5 million. This is significantly lower than the $100 million spent on training OpenAI's GPT-4. Sometimes I feel like I'm running down an alley throwing garbage cans behind me, and sadly, I've been training to run.

Thacker adds: "Companies should realise that employees will be embracing generative AI integration services from trusted enterprise platforms such as Teams, Slack, Zoom and so on."

He continues: "In addition, organisations need to develop an approach to assessing the output of ChatGPT, ensuring that experienced people are in the loop to determine the validity of the outputs."

While legislation such as the UK's Data Protection and Digital Information Bill and the European Union's proposed AI Act is a step in the right direction for regulating software like ChatGPT, Thacker says there are "currently few assurances about the way companies whose products use generative AI will process and store data". DeepSeek is a Chinese startup that develops open-source AI models rivalling ChatGPT, which helped bring generative artificial intelligence to the mainstream.

There are safer ways for both programmers and non-programmers to try DeepSeek.

But Jones says there are several strategies businesses can adopt to tackle AI bias, such as holding regular audits and monitoring the responses provided by chatbots. He also says there are a number of steps that firms can take to ensure their staff use this technology responsibly and securely. Ultimately, it is the duty of security leaders to ensure that staff use AI tools safely and responsibly.

Trustwave said that while static analysis tools have been used for years to identify vulnerabilities in code, such tools are limited in their ability to assess broader security issues, sometimes reporting vulnerabilities that are impossible to exploit.

The week after DeepSeek's R1 release, the Bank of China announced its "AI Industry Development Action Plan," aiming to provide at least 1 trillion yuan ($137 billion) over the next five years to support Chinese AI infrastructure build-outs and the development of applications ranging from robotics to the low-earth orbit economy. Whatever the case may be, developers have taken to DeepSeek's models, which aren't open source as the term is commonly understood but are available under permissive licenses that allow for commercial use.

"The key capabilities are having complete app usage visibility for comprehensive monitoring of all software as a service (SaaS) usage activity, including employee use of new and emerging generative AI apps that may put data at risk," he adds.

Something else to consider is that AI tools often exhibit signs of bias and discrimination, which can cause serious reputational and legal damage to companies using this software for customer service and hiring.

Given that not every piece of web-based content is accurate, there is a risk of apps like ChatGPT spreading misinformation. Verschuren believes the creators of generative AI software should ensure data is only mined from "reputable, licensed and regularly updated sources" to tackle misinformation.

Researchers from Trustwave's SpiderLabs have tested how well ChatGPT can analyse source code and how useful its recommendations for making that code more secure are. "We provided ChatGPT with a lot of code to see how it would respond," the researchers said.
