Although the European Commission has pledged €750 million to build and maintain AI-optimized supercomputers that startups can use to train their AI models, it's hard to say whether those startups will be able to generate enough revenue to justify the EU's initial investment, especially since that is already a challenge for established AI companies. Given the number of models, I've broken them down by category. Altman acknowledged that regional differences in AI products were inevitable, given current geopolitics, and that AI services would likely operate differently in different countries. Inferencing refers to the computing power, electricity, data storage and other resources needed to make AI models work in real time. Consequently, Chinese AI labs operate with increasingly fewer computing resources than their U.S. counterparts. The company has attracted attention in global AI circles after writing in a paper last month that the training of DeepSeek-V3 required less than US$6 million worth of computing power from Nvidia H800 chips.

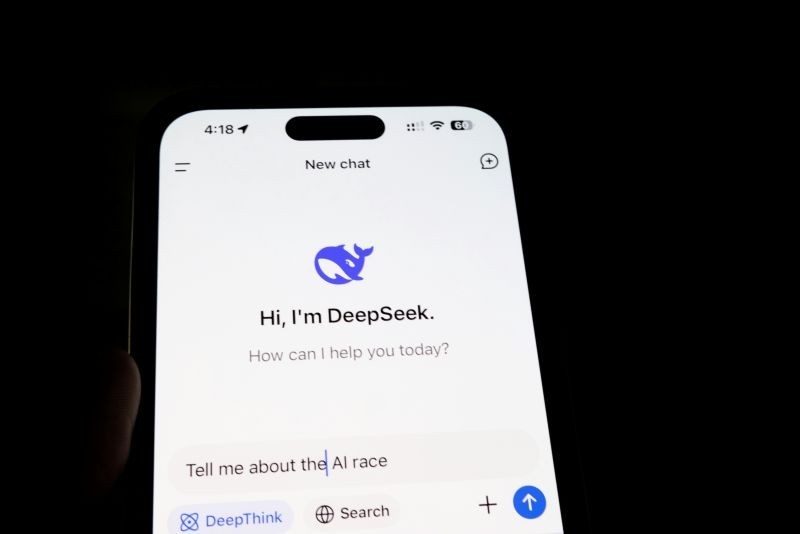

DeepSeek-R1, the AI model from Chinese startup DeepSeek, soared to the top of the charts of the most downloaded and active models on the open-source AI platform Hugging Face hours after its release last week. Models at the top of the lists are those that are most interesting, and some models are filtered out for the length of the issue. The model is also another feather in Mistral's cap, as the French startup continues to compete with the world's top AI companies. Chinese AI startup DeepSeek AI has ushered in a new era in large language models (LLMs) by debuting the DeepSeek LLM family. If DeepSeek's performance claims are true, it could prove that the startup managed to build powerful AI models despite strict US export controls preventing chipmakers like Nvidia from selling high-performance graphics cards in China. Nevertheless, if R1 has managed to do what DeepSeek says it has, then it may have a massive impact on the broader artificial intelligence industry, especially in the United States, where AI investment is highest. According to Phillip Walker, Customer Advocate CEO of Network Solutions Provider USA, the development of DeepSeek's model was accelerated by learning from past AI pitfalls and challenges that other companies have endured.

The progress made by DeepSeek is a testament to the growing influence of Chinese tech companies in the global arena, and a reminder of the ever-evolving landscape of artificial intelligence development. In the weeks following the Lunar New Year, DeepSeek has shaken up the global tech industry, igniting fierce competition in artificial intelligence (AI). Many are speculating that DeepSeek actually used a stash of illicit Nvidia H100 GPUs, which are banned in China under U.S. export controls, instead of the H800s.

To be clear, DeepSeek is sending your data to China. Once this data is out there, users have no control over who gets a hold of it or how it's used.

Models developed by American companies will avoid answering certain questions too, but for the most part that is in the interest of safety and fairness rather than outright censorship. And as a product of China, DeepSeek-R1 is subject to benchmarking by the government's internet regulator to ensure its responses embody so-called "core socialist values." Users have noticed that the model won't respond to questions about the Tiananmen Square massacre, for example, or the Uyghur detention camps.

Users are advised to use simpler zero-shot prompts (directly specifying their intended output without examples) for better results. Training starts with a "cold start" phase, in which the underlying V3 model is fine-tuned on a small set of carefully crafted chain-of-thought (CoT) reasoning examples to improve clarity and readability. In addition to reasoning and logic-focused data, the model is trained on data from other domains to enhance its capabilities in writing, role-playing and more general-purpose tasks. DeepSeek-R1 comes close to matching all of the capabilities of these other models across various industry benchmarks.

One of the standout features of DeepSeek's LLMs is the 67B Base version's exceptional performance compared to the Llama2 70B Base, showcasing superior capabilities in reasoning, coding, mathematics, and Chinese comprehension. Comprising the DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat, these open-source models mark a notable stride forward in language comprehension and versatile application.
