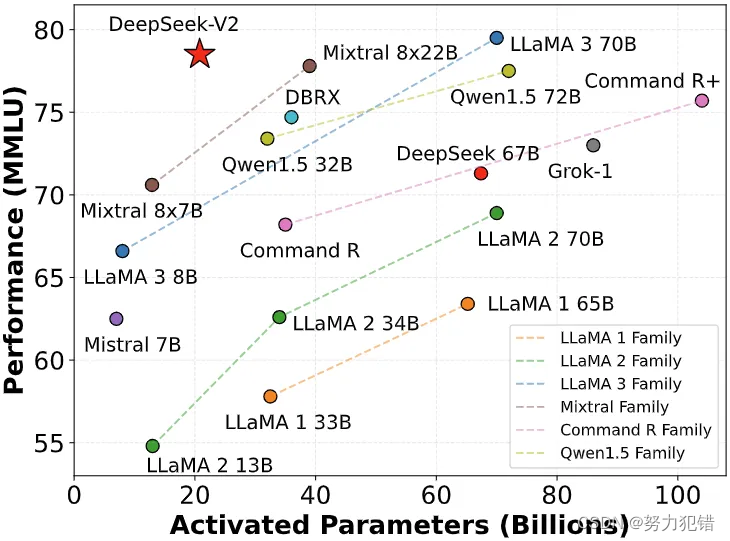

I don’t know where Wang got his information; I’m guessing he’s referring to this November 2024 tweet from Dylan Patel, which says that DeepSeek had "over 50k Hopper GPUs". H800s, however, are Hopper GPUs; they just have much more constrained memory bandwidth than H100s because of U.S. export restrictions. We'll see if OpenAI justifies its $157B valuation and how many takers it has for its $2k/month subscriptions. Access to DeepSeek’s most powerful versions costs some 95% less than OpenAI and its rivals. However, most of the revelations that contributed to the meltdown, including DeepSeek’s training costs, actually accompanied the V3 announcement over Christmas. Few, however, dispute DeepSeek’s stunning capabilities. At a supposed cost of just $6 million to train, DeepSeek’s new R1 model, released last week, was able to match the performance of OpenAI’s o1 model on several math and reasoning metrics; o1 is the outcome of tens of billions of dollars in investment by OpenAI and its patron Microsoft. Critically, DeepSeekMoE also introduced new approaches to load-balancing and routing during training; traditionally MoE increased communications overhead in training in exchange for efficient inference, but DeepSeek’s approach made training more efficient as well.

The DeepSeek-V2 model introduced two important breakthroughs: DeepSeekMoE and DeepSeekMLA. "MoE" refers to "mixture of experts". Some models, like GPT-3.5, activate the entire model during both training and inference; it turns out, however, that not every part of the model is necessary for the topic at hand. MoE splits the model into multiple "experts" and only activates the ones that are necessary; GPT-4 was a MoE model that was believed to have 16 experts with approximately 110 billion parameters each. DeepSeekMoE, as implemented in V2, introduced important innovations on this concept, including differentiating between more finely-grained specialized experts, and shared experts with more generalized capabilities.

I don't think you would have Liang Wenfeng's type of quotes that the goal is AGI, and that they are hiring people who are interested in doing hard things above the money. That was much more a part of the culture of Silicon Valley, where the money is kind of expected to come from doing hard things, so it doesn't have to be said either.
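The routing idea described above, where a few shared experts always run and only a handful of specialized experts are activated per token, can be illustrated with a minimal sketch. This is a generic top-k gating example in plain Python, not DeepSeek's actual implementation: the names `make_expert` and `moe_forward`, the tiny dimensions, and the choice to renormalize the gate weights over the selected experts are all assumptions made for illustration.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(dim, rng):
    """Hypothetical 'expert': a single dim x dim linear map."""
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return expert

def moe_forward(x, router, routed_experts, shared_experts, top_k):
    """Run one token through the shared experts plus its top_k routed experts."""
    # The router produces one affinity score per routed expert.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    probs = softmax(scores)
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)

    out = [0.0] * len(x)
    for expert in shared_experts:  # always active (assumed: unweighted sum)
        out = [o + y for o, y in zip(out, expert(x))]
    for i in chosen:  # sparsely active, weighted by renormalized gate
        gate = probs[i] / norm
        out = [o + gate * y for o, y in zip(out, routed_experts[i](x))]
    return out, chosen

# Illustrative sizes only; real models use far larger values.
rng = random.Random(0)
DIM, N_ROUTED, N_SHARED, TOP_K = 4, 8, 1, 2
router = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(N_ROUTED)]
routed = [make_expert(DIM, rng) for _ in range(N_ROUTED)]
shared = [make_expert(DIM, rng) for _ in range(N_SHARED)]

token = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_forward(token, router, routed, shared, TOP_K)
print("routed experts used:", sorted(chosen), "of", N_ROUTED)
```

In this sketch only `TOP_K` of the `N_ROUTED` experts do any work for a given token, which is where the compute savings of a mixture-of-experts layer come from; the shared expert stands in for the "more generalized capabilities" that every token needs.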

The key implications of these breakthroughs, and the part you need to understand, only became apparent with V3, which added a new approach to load balancing (further reducing communications overhead) and multi-token prediction in training (further densifying each training step, again reducing overhead): V3 was shockingly cheap to train. On AI accuracy, however, reducing bias often means limiting data diversity, which can hurt the model’s ability to provide high-quality answers across a wide range of topics. Aside from helping to train people and create an ecosystem where there is a lot of AI talent that can go elsewhere to create the AI applications that will actually generate value. Despite plenty of synergy among scientists across the Pacific, the US has let the science and technology cooperation agreement that had been in place for 45 years lapse. That was in October 2023, which is over a year ago (a lot of time for AI!), but I think it's worth reflecting on why I thought that and what has changed as well. LLMs weren't "hitting a wall" at the time or (less hysterically) leveling off, but catching up to what was known to be possible wasn't as hard an endeavor as doing it the first time.

This doesn't mean the trend of AI-infused applications, workflows, and services will abate any time soon: noted AI commentator and Wharton School professor Ethan Mollick is fond of saying that if AI technology stopped advancing today, we would still have 10 years to figure out how to maximize the use of its current state. I wasn't exactly wrong (there was nuance in the view), but I have stated, including in my interview on ChinaTalk, that I thought China would be lagging for a while. Comparing responses with all other AIs on the same questions, DeepSeek is the most dishonest on the market. Next, we set out to investigate whether using different LLMs to write code would result in differences in Binoculars scores. Here, we see a clear separation between Binoculars scores for human and AI-written code for all token lengths, with the expected result of the human-written code having a higher score than the AI-written code. Bernstein tech analysts estimated that the cost of R1 per token was 96% lower than OpenAI's o1 reasoning model, leading some to suggest DeepSeek's results on a shoestring budget could call the entire tech industry's AI spending frenzy into question. Context windows are particularly expensive in terms of memory, as every token requires both a key and corresponding value; DeepSeekMLA, or multi-head latent attention, makes it possible to compress the key-value store, dramatically decreasing memory usage during inference.
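To see why the key-value store dominates memory at long context lengths, and why compressing it helps, a rough back-of-the-envelope calculation is useful. The sketch below is only an approximation under stated assumptions: the model shape (layers, heads, head dimension, latent size) is hypothetical and chosen to make the arithmetic easy, values are assumed to be stored in 2 bytes each, and the "latent" cache is modeled as a single compressed vector per layer per token, which simplifies how multi-head latent attention actually works.

```python
def kv_cache_bytes(n_tokens, n_layers, n_heads, head_dim, bytes_per_value=2):
    """Standard attention: one key and one value vector per head, per layer, per token."""
    per_token = 2 * n_layers * n_heads * head_dim * bytes_per_value
    return n_tokens * per_token

def latent_cache_bytes(n_tokens, n_layers, latent_dim, bytes_per_value=2):
    """Compressed cache (simplified): one shared latent vector per layer, per token."""
    return n_tokens * n_layers * latent_dim * bytes_per_value

# Hypothetical model shape, not taken from any published DeepSeek configuration.
N_TOKENS, N_LAYERS, N_HEADS, HEAD_DIM, LATENT_DIM = 32_768, 32, 32, 128, 512

full = kv_cache_bytes(N_TOKENS, N_LAYERS, N_HEADS, HEAD_DIM)
compressed = latent_cache_bytes(N_TOKENS, N_LAYERS, LATENT_DIM)

print(f"full KV cache: {full / 2**30:.1f} GiB")        # 16.0 GiB
print(f"latent cache:  {compressed / 2**30:.1f} GiB")  # 1.0 GiB
print(f"reduction:     {full // compressed}x")         # 16x
```

With these assumed numbers, a single 32K-token context needs 16 GiB for an uncompressed cache versus 1 GiB for the latent version. The exact ratio depends entirely on the chosen dimensions, but the cache always grows linearly with context length, so any per-token compression carries through to every token.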
