At no point did anyone attempt any alignment strategy on me apart from "more diverse evaluations over more diverse tasks," and I was pretty much left alone to become superintelligent with my original goals intact. Let’s start with the smallest model available to try it out. Let’s look at further tests from Artificial Analysis, a company that provides independent analysis of AI models and API providers. Let’s explore the specific models in the DeepSeek family and how they manage to do all of the above. AI engineers and data scientists can build on DeepSeek-V2.5, creating specialized models for niche applications or further optimizing its performance in specific domains. According to him, DeepSeek-V2.5 outperformed Meta’s Llama 3-70B Instruct and Llama 3.1-405B Instruct, but performed below OpenAI’s GPT-4o mini, Claude 3.5 Sonnet, and OpenAI’s GPT-4o. These methods improved its performance on mathematical benchmarks, achieving pass rates of 63.5% on the high-school-level miniF2F test and 25.3% on the undergraduate-level ProofNet test, setting new state-of-the-art results. These results were achieved with the model judged by GPT-4o, showing its cross-lingual and cultural adaptability. Start small. Identify those areas and skills, what I call "Strongholds of Durable Skills," to develop within the framework presented in Zao Sanders’ model.


This concern led the Kennedy administration to start sharing nuclear security technologies with the Soviet Union, beginning with basic safety mechanisms called "permissive action links," which were electronic locks that required codes to authorize nuclear launches. South Korea, for example, is a significant backfill concern in certain categories of deposition tools. DeepSeek, OpenAI, and Meta each say they collect people’s data, such as their account information, their activities on the platforms, and the devices they’re using. In March 2023, Liang’s fund announced via its official WeChat account that it was "starting over," moving beyond trading to focus all resources on building a "new independent research group to explore the essence of AGI" (Artificial General Intelligence). Always do your research before buying any cryptocurrency or investing in any companies. The model’s open-source nature also opens doors for further research and development. "DeepSeek V2.5 is the actual best performing open-source model I’ve tested, inclusive of the 405B variants," he wrote, further underscoring the model’s potential. This allows the model to process data faster and with less memory without losing accuracy.

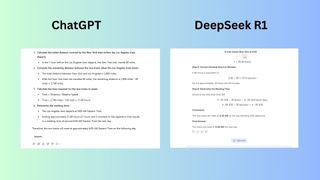

This approach allows models to handle different aspects of data more effectively, improving efficiency and scalability in large-scale tasks. As companies and developers seek to leverage AI more effectively, DeepSeek-AI’s latest release positions itself as a top contender in both general-purpose language tasks and specialized coding functionalities. Its latest release, which came on the day Trump was inaugurated, has left many of America's top industry researchers stunned. Impressive speed. Let's examine the innovative architecture under the hood of the latest models. The combination of these innovations gives DeepSeek-V2 particular features that make it even more competitive among other open models than earlier versions. Tabnine to get a complete look at the capabilities and features of GitHub Copilot and how it stacks up against Tabnine. The move signals DeepSeek-AI’s commitment to democratizing access to advanced AI capabilities. It is said to have capabilities comparable to OpenAI's o1 model, which powers ChatGPT, particularly in areas such as mathematics, coding, and reasoning. The latest model, released by DeepSeek in August 2024, is an optimized version of their open-source model for theorem proving in Lean 4, DeepSeek-Prover-V1.5. DeepSeek-V2 is a state-of-the-art language model that uses a Transformer architecture combined with an innovative MoE system and a specialized attention mechanism called Multi-Head Latent Attention (MLA).

By implementing these methods, DeepSeekMoE enhances the efficiency of the model, allowing it to perform better than other MoE models, especially when handling larger datasets. This means they successfully overcame the earlier challenges in computational efficiency! But, like many models, it faced challenges in computational efficiency and scalability. Transformer architecture: At its core, DeepSeek-V2 uses the Transformer architecture, which processes text by splitting it into smaller tokens (like words or subwords) and then uses layers of computations to understand the relationships between these tokens. The development process began with standard pre-training on a massive dataset of text and images to build basic language and visual understanding. With this model, DeepSeek AI showed it could efficiently process high-resolution images (1024x1024) within a fixed token budget, all while keeping computational overhead low. Capabilities: Gemini is a powerful generative model specializing in multi-modal content creation, including text, code, and images. This ensures that each task is handled by the part of the model best suited for it. That is cool. Against my personal GPQA-like benchmark, DeepSeek v2 is the actual best performing open source model I've tested (inclusive of the 405B variants).
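The idea that each task is handled by the part of the model best suited for it can be illustrated with a generic top-k Mixture-of-Experts router. This is a minimal sketch under stated assumptions, not DeepSeek's implementation: the function names (`route_token`, `moe_layer`), the toy experts, and the router scores are all hypothetical, and real MoE layers learn the router and use neural networks as experts.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k=2):
    """Pick the top_k experts for one token and renormalize their weights.

    router_scores is a hypothetical list with one score per expert.
    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_layer(token, experts, router_scores, top_k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    out = [0.0] * len(token)
    for idx, weight in route_token(router_scores, top_k):
        expert_out = experts[idx](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out

# Toy experts standing in for feed-forward sub-networks (illustrative only).
experts = [
    lambda x: [2.0 * v for v in x],
    lambda x: [v + 1.0 for v in x],
    lambda x: [-v for v in x],
    lambda x: [0.0 for _ in x],
]

if __name__ == "__main__":
    scores = [0.1, 3.0, 0.2, 1.5]  # made-up router scores for one token
    print(route_token(scores))
    print(moe_layer([1.0, 2.0], experts, scores))
```

Because only `top_k` of the experts run for any given token, the compute per token stays roughly constant even as the total number of experts grows, which is the efficiency argument usually made for MoE layers.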

